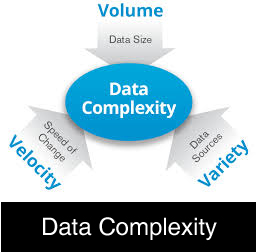

Big data is a large amount of structured and unstructured data that grows exponentially. Such data is difficult to manage with traditional database management and data processing tools. Big Data matters to industry because analyzing large amounts of data can lead to better decision making. It can also be used to identify potential customers. For example, suppose you post “I am planning to go to Goa this weekend” on a social networking site like Facebook. You are populating cyberspace with these bits of data. Facebook then collects this information and sends it to corporations, which use it to identify potential customers. The next day you get a coupon from MakeMyTrip for a Goa package. Big data is characterized by the 3 V’s: Volume, Velocity and Variety.

Volume– Volume refers to the sheer amount of data. Data volume increases for a variety of reasons, such as the continuous flow of data from social media or the transactions that take place. For example, Facebook handles 40 million photos from its user base and Walmart handles 1 million customer transactions per hour, both of which increase data volume. At first, large data volumes were a big issue, but falling storage costs have made storage much less of a concern.

Velocity– Data flows in at high speed, and technologies such as RFID tags and sensors are used to capture and keep control of this real-time data. Responding quickly enough to such high-speed data is a big issue in most organizations.

Variety– Data comes in two types of formats: structured data and unstructured data. Structured data is data held in databases, while unstructured data includes audio, video, images, email, documents and others.

Big Data implementations are commonly based on the MapReduce framework, which can process huge amounts of data. In this framework, queries are split into smaller tasks that are distributed across nodes and processed in parallel. The results are then gathered and delivered. MapReduce offers advantages such as automatic parallelization, runtime partitioning, task scheduling and handling of machine failures. The framework was proposed by Google, and this architecture is used by Yahoo, Facebook, Amazon and a growing list of others.
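The split, process in parallel, and gather steps can be sketched with a small word count in plain Python. This is only an illustration of the idea, not Hadoop's or Google's actual API: the function names (`map_phase`, `shuffle`, `reduce_phase`, `word_count`) are made up for this example, and a thread pool on one machine stands in for the parallel nodes of a cluster.

```python
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor


def map_phase(chunk):
    """Map: emit a (word, 1) pair for every word in one chunk of input."""
    return [(word.lower(), 1) for word in chunk.split()]


def shuffle(mapped_chunks):
    """Shuffle: group the emitted values by key across all mappers' output."""
    groups = defaultdict(list)
    for pairs in mapped_chunks:
        for key, value in pairs:
            groups[key].append(value)
    return groups


def reduce_phase(key, values):
    """Reduce: combine all values collected for one key into a single result."""
    return key, sum(values)


def word_count(chunks, workers=4):
    # Assumption: each worker thread stands in for one node of a cluster.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        mapped = list(pool.map(map_phase, chunks))
    groups = shuffle(mapped)
    return dict(reduce_phase(key, values) for key, values in groups.items())


if __name__ == "__main__":
    # Hypothetical input, already split into three chunks.
    sample = ["big data is big", "data flows fast", "big clusters process data"]
    print(word_count(sample))
```

For the sample chunks, “big” and “data” each end up with a count of 3. A real framework additionally takes care of partitioning the input, scheduling the tasks and rerunning work from failed machines, none of which this sketch attempts.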

Technologies used in Big Data-

Hadoop– Hadoop is an open source platform for handling Big Data. It is used for large-scale processing and can work with multiple data sources. Hadoop is used for handling social media data, weather report data, etc.

Hive– Hive was originally developed by Facebook. It is a higher-level abstraction over the Hadoop framework. Hive uses a “SQL-like” language that allows queries to be executed over data stored in a Hadoop cluster.

PIG– PIG was developed by Yahoo. It is an open source platform. It is similar to Hive, but PIG uses its own scripting language, Pig Latin (often described as “PERL-like”), for query execution over data stored in a Hadoop cluster.
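The difference in style between the two can be sketched in Python. This is an analogy only, not Hive or PIG code: the standard library's `sqlite3` module stands in for a SQL-like engine, a hand-written sequence of steps stands in for a script-style data flow, and the sample rows and function names are hypothetical.

```python
import sqlite3
from collections import defaultdict

# Hypothetical sample data: (name, city) pairs standing in for rows in a cluster.
ROWS = [("asha", "Goa"), ("ben", "Pune"), ("chen", "Goa"), ("dev", "Goa"), ("eli", "Pune")]


def declarative_count(rows):
    """SQL-like style: describe the result wanted and let the engine work out the steps."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE posts (name TEXT, city TEXT)")
    conn.executemany("INSERT INTO posts VALUES (?, ?)", rows)
    result = conn.execute(
        "SELECT city, COUNT(*) FROM posts GROUP BY city ORDER BY city"
    ).fetchall()
    conn.close()
    return result


def dataflow_count(rows):
    """Script style: spell out each transformation step in order."""
    grouped = defaultdict(list)
    for name, city in rows:  # step 1: group rows by city
        grouped[city].append(name)
    counted = [(city, len(names)) for city, names in grouped.items()]  # step 2: count each group
    return sorted(counted)  # step 3: order the output by city


if __name__ == "__main__":
    print(declarative_count(ROWS))
    print(dataflow_count(ROWS))
```

Both functions return the same per-city counts. The first states what is wanted in a single query, while the second lays out how to compute it one step at a time.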

SkyTree– SkyTree is another platform used for handling Big Data. It is a high-performance machine learning platform, which makes it efficient in cases where handling data manually or with conventional automated methods is very difficult and expensive.

There are other platforms, such as PLATFORA and WibiData, which are also used for handling Big Data.